
In 2012, a Microsoft employee working on Bing had an idea about changing the way the search engine displayed ad headlines. Developing it wouldn’t require much effort—just a few days of an engineer’s time—but it was one of the hundreds of ideas proposed, and the program managers deemed it a low priority. So it didn’t get implemented for more than six months, until an engineer, who saw that the cost of writing the code for it would be small, launched a simple A/B test.

Within hours the new headline variation was producing abnormally high revenue, triggering a “too good to be true” alert. Usually, such alerts signal a bug, but not in this case. An analysis showed that the change had increased revenue by an astonishing 12%—which on an annual basis would come to more than $100 million in the US alone—without hurting any of the key user-experience metrics. It was the best revenue-generating idea in Bing’s history, but until the test was done, its value was under-appreciated.

The anecdote above appeared in the October 2017 issue of HBR, in an essay written by a Microsoft employee and a Harvard Business School professor. I picked this anecdote because it runs against a common school of thought in A/B testing: the belief that small changes can't make a big difference to a product.

As the Bing example and numerous others show, small changes can make a big difference. This isn't to say that small changes will always produce big wins; we need to work on bigger ideas as well. So how do we generate these ideas, and how do we identify which of them are going to work?

Let’s focus first on getting the ideas. If we get enough ideas, some of them are going to work.

Step 1 - Generate Ideas: Ask-Observe Framework

You can follow a simple framework to generate ideas. I call it the ask-observe framework.

Here is how it works. There are two groups you can learn from, customers and operators, where:

Operators = {your team, competition, people who have solved a similar problem in the same or different domain, industry leaders who can reason from first principles}

You can do four things to generate ideas:

Ask the customers

Ask the operators

Observe the customers

Observe the operators
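The framework is a 2×2 grid: two actions (ask, observe) times two audiences (customers, operators). If you keep your idea backlog somewhere you can query, one way to spot a neglected quadrant is to tag each idea with its source and count. Here is a minimal Python sketch; the `Idea` class, the `coverage` helper, and the sample ideas are all hypothetical.

```python
from dataclasses import dataclass
from itertools import product

ACTIONS = ("ask", "observe")
AUDIENCES = ("customers", "operators")

# The four idea sources: every (action, audience) pair of the framework.
SOURCES = [f"{action} the {audience}" for action, audience in product(ACTIONS, AUDIENCES)]


@dataclass
class Idea:
    title: str
    source: str  # must be one of SOURCES

    def __post_init__(self):
        if self.source not in SOURCES:
            raise ValueError(f"unknown source: {self.source!r}")


def coverage(ideas):
    """Count ideas per source so that empty quadrants stand out."""
    counts = {source: 0 for source in SOURCES}
    for idea in ideas:
        counts[idea.source] += 1
    return counts


# Hypothetical backlog entries.
backlog = [
    Idea("Exit survey on pricing page", "ask the customers"),
    Idea("Fix confusing checkout button", "observe the customers"),
    Idea("Adapt a competitor's onboarding checklist", "observe the operators"),
]
print(coverage(backlog))  # "ask the operators" is 0: nobody has talked to peers yet
```

A zero in any quadrant is a prompt to go generate ideas there, not proof that the quadrant has nothing to offer.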

Let me explain them one by one.

Ask the Customers

You can ask customers through various methods to validate hypotheses and generate insights.

On-site surveys: Some of the best insights come when you ask users questions while they are using the product. A scalable way of doing this continuously is to add contextual surveys to your app or website that appear as visitors browse. For example, an on-site survey might ask visitors who have been on the same page for a while: “Is there anything holding you back from making a purchase today? If so, what is it?”
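Survey tools typically handle the triggering for you, but the rule behind “visitors who have been on the same page for a while” is simple enough to sketch. A minimal Python version; the 30-second threshold, the `PageVisit` fields, and `should_show_survey` are hypothetical, not any tool's API.

```python
from dataclasses import dataclass

# Hypothetical threshold: trigger the survey after 30 seconds on the same page.
DWELL_THRESHOLD_SECONDS = 30.0

SURVEY_QUESTION = (
    "Is there anything holding you back from making a purchase today? "
    "If so, what is it?"
)


@dataclass
class PageVisit:
    page: str
    entered_at: float               # seconds since the session started
    purchased: bool = False         # no need to ask buyers what holds them back
    already_surveyed: bool = False  # ask each visitor at most once


def should_show_survey(visit: PageVisit, now: float,
                       threshold: float = DWELL_THRESHOLD_SECONDS) -> bool:
    """Show the survey only to visitors who linger without buying."""
    if visit.purchased or visit.already_surveyed:
        return False
    return now - visit.entered_at >= threshold


visit = PageVisit(page="/pricing", entered_at=10.0)
print(should_show_survey(visit, now=25.0))  # False: only 15 seconds on the page
print(should_show_survey(visit, now=45.0))  # True: 35 seconds on the page
```

Asking each visitor at most once keeps the survey contextual rather than annoying.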

Customer interviews: Customer interviews are amazing. Nothing can replace getting on the phone and talking to your customers. Whether it's the problem definition, their motivations, or anything else, you can learn a great deal through customer interviews.

Surveys: You can also send full-length surveys to your target group (TG). Design a separate survey for each target group. Focus on defining who your customers are, what problems they have, what concerns they had before purchasing, and why they bought from you, and on identifying the words and phrases they use to describe your product.
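For the last point, counting recurring phrases across open-ended answers is a quick first pass before reading them in detail. A minimal sketch using only the standard library; the stop-word list and sample responses are hypothetical, and a real analysis would still need a human read of the answers.

```python
import re
from collections import Counter

# A small, hypothetical stop-word list; extend it for real data.
STOP_WORDS = {"a", "an", "and", "the", "is", "it", "i", "to", "of",
              "for", "my", "me", "this", "was", "so"}


def tokens(text):
    """Lowercase words, keeping apostrophes inside words (e.g. "don't")."""
    return re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())


def top_phrases(responses, n=2, k=5):
    """Return the k most common n-word phrases across all responses.

    Stop words are removed before pairing, so a phrase may skip over them.
    """
    counts = Counter()
    for response in responses:
        words = [w for w in tokens(response) if w not in STOP_WORDS]
        counts.update(" ".join(words[i:i + n]) for i in range(len(words) - n + 1))
    return counts.most_common(k)


responses = ["Saves me so much time", "It saves time every week", "saves time and money"]
print(top_phrases(responses, k=1))  # [('saves time', 2)]
```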

Observe the Customers

Observing customers, both while they use your app and when they are off the app in their own world, is a good way to generate insights.

User testing: User testing means asking users to perform a few tasks on the app or website and recording the sessions for later reference. These sessions surface a lot of usability issues, which is why they are also known as usability tests. Steve Krug's book Don't Make Me Think is a great primer on usability testing.

Analytics: This is where most teams spend their time looking for insights. I have covered in earlier posts how segmentation and cohort analysis, along with AARRR, can help you uncover valuable insights from data. Note that data will mostly tell you what is wrong. To understand why it's wrong, you have to go to customers and ask or observe them through usability tests, surveys, interviews, etc.
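As a refresher on cohort analysis: group users by signup month, then track what share of each cohort is active in each following month. A minimal sketch in plain Python, assuming you can export a signup date per user and a list of activity dates; the function names and sample data are hypothetical.

```python
from collections import defaultdict
from datetime import date


def month_index(d: date) -> int:
    """Months since year 0, so month differences are simple subtraction."""
    return d.year * 12 + d.month - 1


def cohort_retention(signups, activity):
    """
    signups:  {user_id: signup_date}
    activity: iterable of (user_id, activity_date)
    Returns {"YYYY-MM": [share of the cohort active in month 0, 1, 2, ...]}.
    """
    cohort_of = {user: month_index(d) for user, d in signups.items()}
    cohort_size = defaultdict(int)
    for cohort in cohort_of.values():
        cohort_size[cohort] += 1

    # (cohort, months since signup) -> set of users active in that month
    active = defaultdict(set)
    for user, day in activity:
        if user in cohort_of:
            offset = month_index(day) - cohort_of[user]
            if offset >= 0:
                active[(cohort_of[user], offset)].add(user)

    table = {}
    for cohort in sorted(cohort_size):
        last = max((o for (c, o) in active if c == cohort), default=0)
        label = f"{cohort // 12}-{cohort % 12 + 1:02d}"
        table[label] = [len(active.get((cohort, o), ())) / cohort_size[cohort]
                        for o in range(last + 1)]
    return table


signups = {"a": date(2024, 1, 5), "b": date(2024, 1, 20), "c": date(2024, 2, 3)}
activity = [("a", date(2024, 1, 6)), ("b", date(2024, 1, 21)),
            ("a", date(2024, 2, 10)), ("c", date(2024, 2, 4))]
print(cohort_retention(signups, activity))  # {'2024-01': [1.0, 0.5], '2024-02': [1.0]}
```

Segmentation is the same idea with a different grouping key (device, channel, persona) in place of the signup month.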

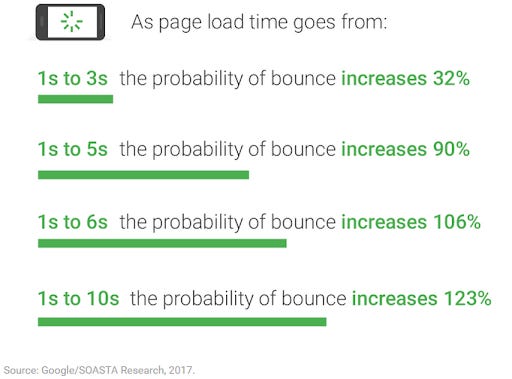

Technical analysis: Page speed matters to most of your users. Research Google published in 2017 showed that slower page loads increase bounce rates on websites.

Start by collecting page speed and latency data across browsers and devices; you will quickly find some areas for improvement. One false negative to watch for with page speed and latency: you may improve the average page speed, only to realize that it isn't affecting bounce rate. The reason might be that load times are still bad enough for the cohort that was bouncing earlier. So use segmentation and analytics wisely. To measure whether technical improvements make any difference, always run an A/B test rather than relying on absolute improvements in page speed or latency numbers.
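To catch the false negative described above, compare segments on a tail percentile, not only the overall mean. A minimal sketch, assuming you have load-time samples tagged by segment (device, browser, or network); the 3-second budget and the sample numbers are hypothetical.

```python
from collections import defaultdict
from statistics import mean, quantiles


def load_time_summary(samples):
    """
    samples: iterable of (segment, load_time_seconds), e.g. ("mobile", 4.2).
    Returns {segment: (mean, p90)} so a slow tail in one segment is not
    hidden behind a healthy overall average.
    """
    by_segment = defaultdict(list)
    for segment, seconds in samples:
        by_segment[segment].append(seconds)

    summary = {}
    for segment, times in by_segment.items():
        if len(times) >= 2:
            # 9 cut points for deciles; the last one is the 90th percentile.
            p90 = quantiles(times, n=10, method="inclusive")[-1]
        else:
            p90 = times[0]
        summary[segment] = (mean(times), p90)
    return summary


def slow_segments(summary, budget_seconds=3.0):
    """Segments whose p90 load time exceeds a (hypothetical) budget."""
    return sorted(s for s, (_, p90) in summary.items() if p90 > budget_seconds)


samples = [("desktop", 1.0), ("desktop", 1.2), ("desktop", 1.1),
           ("mobile", 4.0), ("mobile", 6.0), ("mobile", 5.0)]
summary = load_time_summary(samples)
print(slow_segments(summary))  # ['mobile']
```

Shaving time off desktop would improve the overall average here while leaving the mobile segment, where the bounces likely come from, over budget. Whether a fix actually reduces bounces is still a question for an A/B test.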

Session replays: Session replays are similar to user testing: you record sessions on your website and watch how your actual visitors navigate it. What do they have trouble finding? Where do they get frustrated? Where do they seem confused? It's especially worth doing in the early days. If you haven't tried it yet, check out Hotjar or Crazy Egg. One thing to watch out for is your website's page load time: the JavaScript these tools load can increase page load time, which can lead to a higher bounce rate. Be careful there.

User reviews: Look through user reviews on social media, the Play Store, the App Store, etc. This is the easiest source of insight, yet the one most often missed. Replying to those reviews is also a good way to stay connected with users.

User journeys of different personas: Your product is likely built for multiple personas. For example, on an educational website, the same landing page can't cater to both teachers and students, even though both type the same URL to reach it. Look at how each persona uses the product. Understanding this will give you good ideas on how to improve the product for each of them.
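One way to make persona differences visible is to compute the same funnel separately for each persona. A minimal sketch, assuming your events carry a persona label; the funnel steps, event format, and sample data are hypothetical.

```python
from collections import defaultdict

# Hypothetical funnel steps for an educational website.
FUNNEL = ["landing", "signup", "first_lesson"]


def funnel_by_persona(events):
    """
    events: iterable of (user_id, persona, step).
    Returns {persona: [users reaching each FUNNEL step / users who landed]}.
    Simplification: a user counts for a step even if a prior step is missing.
    """
    reached = defaultdict(lambda: defaultdict(set))  # persona -> step -> user ids
    for user, persona, step in events:
        reached[persona][step].add(user)

    result = {}
    for persona, steps in reached.items():
        entered = len(steps[FUNNEL[0]])
        if entered == 0:
            continue  # no landing events recorded for this persona
        result[persona] = [len(steps[step]) / entered for step in FUNNEL]
    return result


events = [
    ("t1", "teacher", "landing"), ("t1", "teacher", "signup"),
    ("t2", "teacher", "landing"),
    ("s1", "student", "landing"), ("s1", "student", "signup"),
    ("s1", "student", "first_lesson"),
    ("s2", "student", "landing"), ("s2", "student", "signup"),
]
print(funnel_by_persona(events))
# {'teacher': [1.0, 0.5, 0.0], 'student': [1.0, 1.0, 0.5]}
```

A blended funnel would hide that teachers never reach the first lesson, which hints that the step may not be built for them at all.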

Ask the Operators

Operators = {your team, competition, people who have solved a similar problem in the same or different domain, industry leaders who can reason from first principles}

Product walkthrough: Review your product page by page with your team and identify where you can make the experience more relevant, clear, and valuable, and where you can reduce friction. Block an hour on your calendar every couple of weeks to do this.

Brainstorming on questions: Good hypotheses to test arise from the right questions. Spend enough time defining the problem statement, then move on to asking Who, What, Why, When, Where, How, and How Much. During this exercise, avoid discussing or answering the questions; the goal is only to generate them.

Talk to other teams working on similar problems: If your growth has stalled, talk to a few senior leaders who have led growth for years. They can point you in a direction. Talk to other growth professionals on a regular basis. Exchange ideas, share experiments, and discuss what worked, what didn't, and why you think you got the results you did. Then adapt their ideas to your own product.

Observe the Operators

Here you will mostly observe operators who aren't directly accessible to you: your competition, industry leaders, etc.

Competitor products: Your competition is an expert you have to beat. Use competitor products, and look through their updates and reviews. Always try to understand why they built something and whether it is an experiment or a full rollout. Don't copy them blindly.

Product comparison: This is similar to a product walkthrough. Each team member picks one or two products they use actively, from your competitive or noncompetitive space. Walk through each product's flow together and discuss what it does well, what it could do better, and how you can adapt it to your own product and audience.

World-class products: Study how world-class products are built. What changes are they making, and why? A lot of literature is available about top companies and products. Because the vast majority of people use these products, many of their product principles can be applied to your own in some form.

Communities: Join good online communities and newsletters around your industry. Product Hunt, Reddit, and Hacker News are some of the good communities in tech.

Study world-leading operators: You don't have to reinvent the wheel in everything you do. You can avoid a lot of pain by observing how world-leading experts operate, by reading and listening to them. Many excellent operators don't share their thinking publicly, but you can start by following those who do, like Lenny Rachitsky, Andrew Chen, Paul Graham, Ben Horowitz, Keith Rabois, etc. I also keep lists on my Twitter account, such as “Best to Follow,” where I keep adding people in different areas.

Step 2 - Consolidate Ideas: Product Themes

By now, you have a list of ideas. It's time to consolidate them, and the best way to consolidate ideas is through product themes.

Examples of themes are acquisition, activation, retention, revenue, referral, delight, engagement, etc. Themes help you identify which problems hurt your user experience the most. For example, if your churn rate is low, you don't have much to gain by focusing on retention. If your bounce rate is high, you can focus on activation.

Pick a few themes every quarter and work on them. In any given quarter, you shouldn't be working on more than 2-3 themes.
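Consolidation and theme selection can be sketched as two small steps: group ideas by theme, then keep the 2-3 themes where the gap to your target is largest. A minimal Python sketch; the “metric gap” input is an assumption, a number you would estimate yourself from your own metrics, and the sample values are hypothetical.

```python
from collections import defaultdict


def group_by_theme(ideas):
    """ideas: iterable of (title, theme). Returns {theme: [titles]}."""
    themes = defaultdict(list)
    for title, theme in ideas:
        themes[theme].append(title)
    return dict(themes)


def pick_themes(metric_gaps, max_themes=3):
    """
    metric_gaps: {theme: how far the theme's key metric is from your target},
    as a fraction you estimate yourself (0.4 = 40% short; 0 = on target).
    Returns at most max_themes themes with a positive gap, largest gap first.
    """
    ranked = sorted(metric_gaps, key=metric_gaps.get, reverse=True)
    return [theme for theme in ranked if metric_gaps[theme] > 0][:max_themes]


gaps = {"retention": 0.0,   # churn is already low: little to gain
        "activation": 0.5,  # bounce rate is high
        "engagement": 0.3,
        "revenue": 0.2,
        "referral": 0.1}
print(pick_themes(gaps))  # ['activation', 'engagement', 'revenue']
```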

By the way, you can also pick the themes first and then generate ideas around them, effectively moving from Step 2 back to Step 1.

So now, you have themes and ideas. It’s time to prioritise the ideas.

Step 3 - Prioritize Ideas: RICE Framework

RICE is an acronym for the four factors used to evaluate each idea: reach (R), impact (I), confidence (C), and effort (E). Intercom, which popularized the framework, has written about it in detail.

Reach

Estimate how many people will be affected by the idea you are proposing.

Reach should also account for frequency of usage. For example, the reach of an idea around payments depends on both the number of paying customers and the number of transactions they make.

Impact

Estimate the impact on an individual person. Multiplied by reach, this number gives you the overall impact the idea may have on users and the business.

The impact is difficult to measure precisely. So you can use a scale: 5 for “very high impact”, 4 for “high impact”, 3 for “medium”, 2 for “low”, 1 for “very low”.

Putting a number on impact is a way of making your gut feeling explicit. It also helps you evaluate later how far off you were from the real impact.
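To check how far off your impact scores were, you can compare them against the lift each shipped idea actually produced in its A/B test. A minimal sketch: if higher predicted scores don't line up with higher observed lifts, your scale needs recalibrating. The function names and the history below are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def lift_by_predicted_impact(results):
    """
    results: iterable of (predicted_impact, observed_lift) for shipped ideas,
    where predicted_impact is the 1-5 score and observed_lift comes from the
    A/B test (e.g. 0.03 for +3% on the target metric).
    Returns {predicted_impact: mean observed lift}, ordered by score.
    """
    buckets = defaultdict(list)
    for score, lift in results:
        buckets[score].append(lift)
    return {score: mean(buckets[score]) for score in sorted(buckets)}


def scores_track_reality(summary):
    """True if mean observed lift never drops as the predicted score rises."""
    lifts = list(summary.values())
    return all(lower <= higher for lower, higher in zip(lifts, lifts[1:]))


history = [(5, 0.02), (5, 0.04), (3, 0.01), (1, 0.05)]
summary = lift_by_predicted_impact(history)
print(list(summary))                  # [1, 3, 5]
print(scores_track_reality(summary))  # False: the "very low" idea beat both others
```

With a handful of experiments this is noisy, so treat it as a prompt for a retrospective rather than a verdict.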

Confidence

Confidence captures how sure you are that the idea will work. Many startups fail because of high enthusiasm for bad ideas. You can use a scale to rate confidence as well: 5 for “very high confidence”, 4 for “high”, 3 for “medium”, 2 for “low”, 1 for “very low”.

Your confidence should come from the data or research you have for the idea. If you don't have data or research to back it up, don't rate it high confidence just because you are enthusiastic about it.

Effort

The final factor is the effort required from the whole team: product, design, and engineering.

The effort can be estimated in “person-months” or “person-weeks”. A person-month is the work that one team member can do in a month.

Now calculate the prioritisation score for each idea and sort the ideas by it.

Score = (Reach × Impact × Confidence) / Effort
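Here is the score computed and sorted in a minimal Python sketch, using this post's 1-5 scales for impact and confidence and person-months for effort. Two assumptions beyond the formula: reach already includes frequency (users × transactions), and ideas without supporting data have their confidence capped at “medium” (3), one way to encode the advice about enthusiasm. The `Idea` fields and the sample ideas are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    reach: float       # people (or transactions) affected per period, incl. frequency
    impact: int        # 1 = very low ... 5 = very high
    confidence: int    # 1 = very low ... 5 = very high
    effort: float      # person-months across product, design, and engineering
    backed_by_data: bool = False

    def __post_init__(self):
        for label, value in (("impact", self.impact), ("confidence", self.confidence)):
            if not 1 <= value <= 5:
                raise ValueError(f"{label} must be 1-5, got {value}")
        if self.effort <= 0:
            raise ValueError("effort must be positive")

    @property
    def effective_confidence(self) -> int:
        # Assumed guardrail: without data or research, cap at "medium" (3).
        return self.confidence if self.backed_by_data else min(self.confidence, 3)

    @property
    def score(self) -> float:
        return self.reach * self.impact * self.effective_confidence / self.effort


def prioritize(ideas):
    """Highest RICE score first."""
    return sorted(ideas, key=lambda idea: idea.score, reverse=True)


ideas = [
    # Reach includes frequency: 2,000 paying customers x 3 transactions per quarter.
    Idea("Payment retry", reach=2_000 * 3, impact=3, confidence=4, effort=2,
         backed_by_data=True),
    Idea("New onboarding flow", reach=10_000, impact=4, confidence=5, effort=8),
    Idea("Ad headline tweak", reach=20_000, impact=2, confidence=3, effort=0.25,
         backed_by_data=True),
]
for idea in prioritize(ideas):
    print(f"{idea.name}: {idea.score:,.0f}")
# Ad headline tweak: 480,000
# Payment retry: 36,000
# New onboarding flow: 15,000
```

The small, cheap headline tweak comes out on top, much like the Bing example: low effort in the denominator can outweigh a modest impact score.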

Try it in some of your projects and see how it works :)

To summarise the post, here is how to generate good ideas for experimentation:

— Pick product themes

— Use the ask-observe framework to generate ideas

— Prioritise using the RICE framework

Quick Announcement: Last week, I faced a dilemma. I had the post ready but wasn't happy with its quality. My key argument for writing a post every week comes from a story I read in the book Art and Fear:

The ceramics teacher announced on opening day that he was dividing the class into two groups. All those on the left side of the studio, he said, would be graded solely on the quantity of work they produced, all those on the right solely on its quality.

His procedure was simple: on the final day of class he would bring in his bathroom scales and weigh the work of the “quantity” group: fifty pound of pots rated an “A”, forty pounds a “B”, and so on. Those being graded on “quality”, however, needed to produce only one pot – albeit a perfect one – to get an “A”.

Well, came grading time and a curious fact emerged: the works of highest quality were all produced by the group being graded for quantity. It seems that while the “quantity” group was busily churning out piles of work – and learning from their mistakes – the “quality” group had sat theorizing about perfection, and in the end had little more to show for their efforts than grandiose theories and a pile of dead clay.

So writing and posting every week helps with the craft of writing :)

However, a key principle I want to hold on to is delivering value in every post. Thousands of people reading every week means hundreds of hours spent on these posts. Quality has to trump quantity now, so I have decided to write a post once every two weeks.

If you think it's a good decision, give me a shoutout by dropping a message on LinkedIn, Twitter, or email. If you disagree, convince me otherwise. I hope to get some compelling arguments for both sides :)

In the next post, I will go into detail on user research, one method that trumps all other ways of doing product development. Watch out for it.

Sincerely,

Deepak
